Wallet Finder

February 11, 2026

A security audit service is a professional inspection of your project's code and infrastructure, designed to find hidden weak spots before hackers do. For any DeFi project, this isn't just a good idea—it's essential for building trust and protecting user funds.

Think of it like hiring an independent team to break into a brand-new bank vault. Before that bank can open its doors, it needs solid proof that every lock, wall, and procedure can withstand a sophisticated heist. A security audit provides exactly that for a digital project.

In the world of DeFi, where a single bug can put billions of dollars at risk, this process is the foundation of user safety. It’s what turns the hope of being secure into a verified reality.

The main goal is to find and document security flaws. Auditors act as ethical hackers, meticulously digging into everything from high-level smart contract logic down to individual lines of code. It’s a hands-on approach designed to catch subtle issues that automated scanners almost always miss.

A good audit service doesn't just point out what's broken; it delivers a clear, actionable roadmap for fixing it. The best results come from a real collaboration between the project’s developers and the auditors.

A comprehensive audit examines all of a project's critical assets. Here’s a checklist of what a typical audit scope includes:

An audit is more than a technical check-up; it's a crucial piece of due diligence. For investors and traders, a transparent and solid audit report is a massive green flag. It signals that a project takes security seriously, making it a much safer place to put your capital.

This independent verification gives users the confidence to trust a protocol with their money, elevating a project from a promising idea to a battle-tested platform ready for the real world. That foundation of credibility is essential for long-term success.

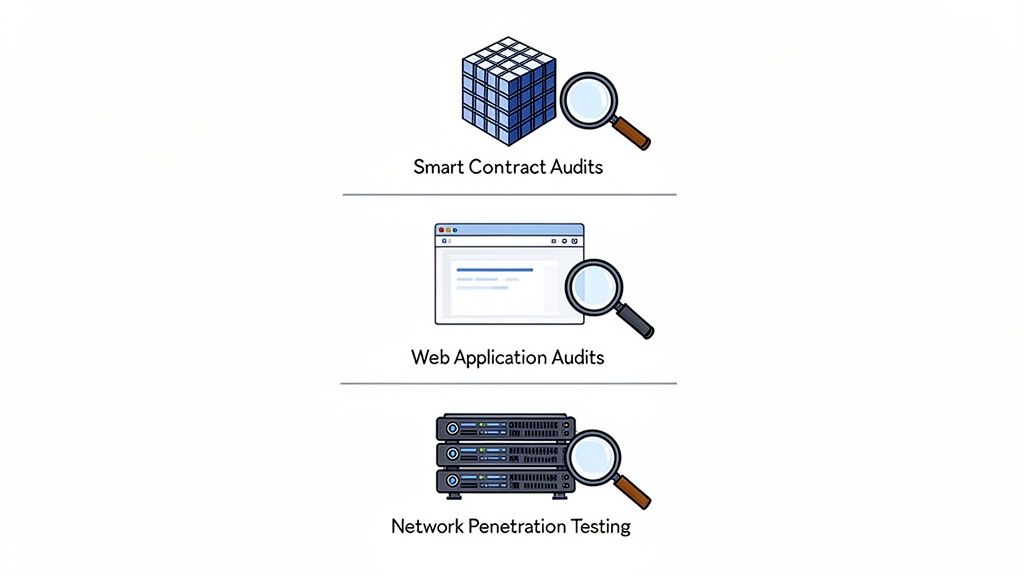

It’s a common mistake to think of a security audit as a single, catch-all service. In reality, a strong security posture is built in layers, with different audits designed to protect specific parts of a project. Each type zeroes in on distinct attack vectors and potential weaknesses.

Knowing these differences is critical. For project teams, it ensures no vital area is left exposed. For investors, it helps verify that a project's "audited" badge actually covers the components that handle user funds and data.

This is the most crucial audit for any DeFi protocol. A smart contract audit is a painstaking, line-by-line manual review of the code's business logic and technical execution.

Auditors look for a wide range of vulnerabilities, including:

Launching without a thorough smart contract audit is like building a bank vault with an untested lock—a massive, unverified risk.

While smart contracts hold the treasure, the web application is the map users follow. A web application audit focuses on the frontend and backend systems that make up the user-facing platform.

A compromised web app can trick users into signing malicious transactions, completely bypassing the security of the smart contract underneath. The user thinks they are approving a simple swap but are actually signing away control of their assets.

In this audit, security experts hunt for common web vulnerabilities like Cross-Site Scripting (XSS), Cross-Site Request Forgery (CSRF), and insecure API endpoints. The goal is to ensure the interface can't be twisted to deceive users or expose their data.

Behind every dApp is a network of servers and infrastructure. A network penetration test, or "pen test," is a simulated cyberattack on this infrastructure. Ethical hackers actively try to breach the project's servers to find and patch weaknesses.

This process can uncover critical vulnerabilities like:

A successful pen test confirms the foundational infrastructure is hardened and ready to fend off external threats.

This table offers a quick comparison of the most common security audit types, their main objectives, and the critical risks they help mitigate for a DeFi project.

| Audit Type | Primary Focus | Key Risks Mitigated |
| --- | --- | --- |
| Smart Contract Audit | The on-chain code and business logic that manages assets. | Direct theft of funds, logic manipulation, and protocol failure. |
| Web Application Audit | The user interface (UI) and application programming interfaces (APIs). | Phishing attacks, data theft, and tricking users into signing malicious transactions. |
| Network Pen Testing | The servers, cloud infrastructure, and databases supporting the application. | Server compromise, data breaches, and service downtime from infrastructure attacks. |

As you can see, each audit type plays a unique role. Relying on just one creates dangerous blind spots, which is why a multi-layered defense is the only way to build a truly secure protocol.

Most projects treat audits as a checkbox—something to complete right before launch to satisfy investors and users. This fundamentally misunderstands how audits create value and leads to a dangerous timing trap that leaves protocols vulnerable even after receiving a "clean" audit report. The reality is that audit timing relative to your development cycle, launch plans, and TVL growth can be the difference between finding critical vulnerabilities when they're cheap to fix and discovering them after millions in user funds are already at risk.

The most common mistake is the "audit-then-ship" mentality where teams complete their entire codebase, get it audited in the final two weeks before launch, and then rush to mainnet immediately after receiving the report. This creates a catastrophic time crunch where the team has days—not weeks—to implement fixes for any critical findings, often cutting corners or making hasty patches that introduce new vulnerabilities the auditors never reviewed.

Here's what actually happens in most audit timelines. A team completes their protocol development, freezes the codebase, and sends it to auditors with a planned mainnet launch date four weeks away. The audit firm schedules a three-week review, which seems like plenty of buffer time. But then the audit report arrives identifying five critical vulnerabilities, twelve high-severity issues, and twenty medium-severity findings that all need addressing.

Now the team has one week before their announced launch to fix thirty-seven findings, re-test all the fixes to ensure they work correctly, and get the auditors to verify the patches in what's called a "remediation review." This is impossible to do properly. The result is either delaying launch, which is embarrassing and expensive when you've already announced dates to your community, or shipping with acknowledged critical findings, which destroys user trust and creates massive exploit risk.

The smarter approach is building audit milestones into your development cycle long before launch. Conduct a preliminary audit when your core contracts are seventy to eighty percent complete. This "alpha audit" happens while you still have flexibility to make architectural changes based on findings. The auditors identify fundamental design flaws early when fixing them doesn't require tearing everything down and rebuilding.

After implementing fixes from the alpha audit and completing the remaining features, you conduct your final "beta audit" six to eight weeks before the planned launch. This gives you adequate time to address findings properly without rushing. Then, two weeks before launch, the auditors conduct a quick verification review confirming all fixes were implemented correctly. This staged approach transforms audits from a frantic pre-launch scramble into a structured security development lifecycle.
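The staged schedule can be worked backward from a launch date. The following is a minimal sketch, assuming the later bound of eight weeks for the beta audit; the function name, parameter names, and dictionary keys are illustrative choices, not an established convention.

```python
from datetime import date, timedelta


def audit_milestones(launch: date, beta_weeks_before: int = 8,
                     verification_weeks_before: int = 2) -> dict[str, date]:
    """Work backward from the launch date to the audit milestones.

    The alpha audit has no date here because the text ties it to code
    completeness (70-80% of core contracts), not to the calendar.
    """
    return {
        "beta_audit_start": launch - timedelta(weeks=beta_weeks_before),
        "verification_review": launch - timedelta(weeks=verification_weeks_before),
        "launch": launch,
    }


# Hypothetical launch date, used only for illustration.
plan = audit_milestones(date(2026, 9, 1))
for name, day in plan.items():
    print(f"{name}: {day.isoformat()}")
```

Publishing the launch date only after the beta audit has started keeps the schedule from being dictated by an announcement.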

The second major timing mistake is waiting until after significant TVL accumulates to conduct thorough security reviews. Many protocols launch with minimal or rushed audits, planning to do a "proper" audit once they've proven product-market fit and raised enough funding. This is incredibly dangerous because the window between achieving meaningful TVL and getting exploited can be measured in days.

Consider the typical trajectory: a protocol soft-launches with a basic audit of core contracts, market reception is positive, and within two weeks TVL grows from zero to twenty million dollars as farmers and yield chasers pile in. The team, thrilled by the traction, announces they're commissioning a comprehensive audit from a top-tier firm. But that audit won't even start for three weeks due to the firm's backlog, then requires four weeks to complete. You now have seven weeks where twenty million in user funds sits in a protocol with only basic security review.

Attackers specifically target protocols in this vulnerable window. They monitor new launches, identify ones gaining traction, and race to find exploits before the comprehensive audit discovers them. By the time your thorough audit report arrives seven weeks later, the damage is done: either you've been exploited, or you're one of the lucky ones who survived despite the risk.

The solution is reverse-engineering your audit timeline from TVL thresholds rather than launch dates. Set a hard rule: comprehensive security audits must be complete before TVL exceeds specific milestones. For example, no more than one million dollars in TVL without a full smart contract audit from a reputable firm. No more than ten million without multiple independent audits. No more than fifty million without audits plus an active bug bounty program.
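The example thresholds above can be encoded as a simple gate. This is a sketch under the assumption that "multiple independent audits" means two or more; `SecurityStatus` and `tvl_cap_usd` are hypothetical names, and the dollar figures are the illustrative ones from the paragraph, not industry standards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SecurityStatus:
    full_audits: int          # completed independent smart contract audits
    bug_bounty_active: bool


def tvl_cap_usd(status: SecurityStatus) -> float:
    """Maximum TVL allowed under the example thresholds in the text."""
    if status.full_audits == 0:
        return 1_000_000.0
    if status.full_audits == 1:
        return 10_000_000.0
    if not status.bug_bounty_active:
        return 50_000_000.0
    return float("inf")  # every listed requirement is met; this rule sets no cap


status = SecurityStatus(full_audits=1, bug_bounty_active=False)
print(f"TVL cap: ${tvl_cap_usd(status):,.0f}")
```

In practice the cap would be enforced through deposit limits in the contracts or the frontend; the function only expresses the policy.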

This means conducting thorough audits while your TVL is still low, when finding vulnerabilities costs you nothing beyond the audit fee and some development time. Once the audits are complete and critical issues resolved, you can scale TVL aggressively with confidence that you're not just hoping attackers don't notice you before your security review finishes.

The third timing trap that most teams completely miss is audit decay—the reality that an audit report has an expiration date after which its security assurances become increasingly meaningless. The moment you deploy audited code to mainnet, the clock starts ticking on how long that audit remains relevant.

Every code change you make after the audit—every upgrade, every new feature, every "small" parameter adjustment—introduces unaudited code that could contain vulnerabilities. Even if you don't change a single line of code, the security landscape evolves. New attack patterns get discovered, novel exploit techniques emerge, and vulnerabilities that weren't on anyone's radar six months ago become well-known attack vectors today.

A protocol that was thoroughly audited two years ago and hasn't been re-audited since is essentially running on faith, not security. The audit report gathering dust on your website might give users false confidence, but it provides zero protection against modern threats that didn't exist when that audit was conducted.

Smart protocols implement continuous audit cycles, not one-time reviews. Major version upgrades trigger full re-audits before deployment. Minor upgrades and parameter changes go through focused reviews examining just the modified code and its interactions with existing systems. Even if no code changes, protocols with significant TVL should undergo annual re-audits to verify they don't have newly discovered vulnerability patterns and to pressure-test their security assumptions against the current threat landscape.
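The review cadence described above amounts to a small decision rule. Here is a rough sketch; the change labels ("major", "minor", "parameter", "none") and the 365-day cutoff are assumptions made for illustration.

```python
from datetime import date


def required_review(change: str, last_full_audit: date, today: date) -> str:
    """Map a change type to the kind of review the text calls for.

    `change` is one of "major", "minor", "parameter", or "none"
    (labels chosen for this sketch).
    """
    if change == "major":
        return "full re-audit"
    if change in {"minor", "parameter"}:
        return "focused review"
    # No code change: fall back to the annual cadence.
    if (today - last_full_audit).days >= 365:
        return "annual re-audit"
    return "no review due"


print(required_review("parameter", date(2025, 6, 1), date(2026, 2, 11)))
print(required_review("none", date(2025, 1, 15), date(2026, 2, 11)))
```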

The cost of continuous auditing is substantial—potentially one hundred thousand to three hundred thousand dollars annually for active protocols with regular upgrades. But this expense is trivial compared to the TVL you're protecting. A protocol managing one billion dollars that spends three hundred thousand annually on security audits is spending 0.03% of TVL on the single most important protection mechanism available. That's not an expense; it's insurance with an absurdly high return on investment.
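The percentage quoted above is easy to check, and the same calculation works for any protocol's own numbers. A minimal sketch, with a hypothetical helper name:

```python
def audit_spend_share(annual_audit_usd: float, tvl_usd: float) -> float:
    """Annual audit spend expressed as a percentage of TVL."""
    return annual_audit_usd / tvl_usd * 100


# Figures from the paragraph: $300,000 per year against $1 billion TVL.
share = audit_spend_share(300_000, 1_000_000_000)
print(f"{share:.2f}% of TVL")
```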

Here's an uncomfortable truth nobody in the audit industry wants to discuss: not all audit reports are honest representations of a protocol's security. Some teams actively manipulate the audit process or the presentation of results to create the appearance of thorough security review while hiding critical unresolved issues. Understanding these manipulation tactics is essential for investors and users trying to assess whether an audit actually means anything or is just security theater.

The incentive structure creates this problem. Audit firms want repeat business and good relationships with protocol teams who pay their fees. Protocol teams want clean reports that don't scare away users and investors. These aligned incentives can subtly—or not so subtly—compromise the independence and thoroughness of the security review. Learning to spot these red flags protects you from trusting protocols whose "audited" badge is essentially meaningless.

The most common manipulation tactic is carefully crafting the audit scope to exclude the most dangerous parts of the codebase. An audit report might boldly claim "comprehensive security review" while the fine print reveals they only examined two of the protocol's eight core contracts, conveniently skipping the complex bridge logic, the governance system, and the reward distribution mechanism where the real vulnerabilities likely hide.

Teams justify this by claiming certain components weren't ready for audit yet, or that they're using battle-tested libraries that don't need review, or that off-chain components are "out of scope" for a smart contract audit. Sometimes these justifications are legitimate. Often, they're strategic omissions designed to avoid discovering vulnerabilities the team doesn't want to fix.

When reviewing an audit report, the very first thing you should examine is the "Scope of Work" section. This lists exactly which contracts, which specific file paths, and which commit hashes were actually reviewed. Cross-reference this against the protocol's actual deployed contracts on-chain. If major components of the live protocol aren't mentioned anywhere in the audit scope, that's a massive red flag that the audit is incomplete regardless of how clean the audited portions appear.

Pay particular attention to temporal language around scope. If the report says they audited "the token contract and core vault," but the protocol also has a bridge, a DAO, and a complex rewards system, where are those components? If they say "future versions will include these features," that means the current live version is running unaudited code. The audit badge is technically true but practically misleading—like claiming your car is "crash tested" when only the steering wheel was actually tested.
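The cross-reference between the audit scope and the live protocol is a set difference. The sketch below assumes you have already collected both lists by hand; the contract names are made up to mirror the example in the text.

```python
def unaudited_contracts(deployed: set[str], audited_scope: set[str]) -> set[str]:
    """Contracts that are live but absent from the report's Scope of Work."""
    return deployed - audited_scope


# Hypothetical protocol: names are illustrative only.
deployed = {"Token", "Vault", "Bridge", "Governor", "RewardsDistributor"}
audited_scope = {"Token", "Vault"}

missing = unaudited_contracts(deployed, audited_scope)
print(sorted(missing))
```

A non-empty result does not prove the protocol is unsafe, but each name in it is live code that the "audited" badge does not cover.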

The second major manipulation involves what happens between the audit firm delivering their initial findings and the publication of the final report. Reputable audit processes are transparent about this: the initial report shows all findings, the team fixes critical issues, the auditors verify the fixes work, and the final published report clearly shows which issues were resolved, which were mitigated, which were acknowledged, and includes the team's responses.

Less scrupulous processes involve quiet negotiation where critical findings in the initial private report mysteriously disappear from the final public version. How does this happen? Sometimes auditors are pressured to downgrade severity classifications—a "critical" finding becomes "high," a "high" becomes "medium." The finding is technically still in the report, but buried among less serious issues where investors won't focus on it.

Other times, findings get reclassified as "false positives" without clear justification. The audit firm's initial automated scan flagged a vulnerability, but after discussion with the team, the auditors conclude it wasn't actually exploitable. Maybe this determination is legitimate technical analysis. Maybe it's the team convincing auditors to look the other way. Without access to the working papers and deliberation process, you can't tell the difference.

The cleanest manipulation is simply omitting findings from the public report entirely while fixing them privately. The team addresses critical vulnerabilities discovered in audit, but the final published report never mentions they existed. This creates an artificially clean public record while the team gets the benefit of having actually fixed serious issues. Users and investors see a report with zero critical findings and assume the protocol was perfectly secure from the start, never knowing about the vulnerabilities that were quietly patched.

How do you defend against this? Look for audit reports that explicitly include version tracking. The best reports clearly state "this is the final report after remediation of X critical, Y high, and Z medium findings from our initial review dated [date]." They include a complete history showing what was found, what was fixed, and what the verification process looked like. Reports that appear as single static documents with no history or remediation tracking are suspect—they might be hiding the messy reality of what was actually discovered.

Almost every audit report contains disclaimers explaining the limitations of what was reviewed and what assurances the audit actually provides. These disclaimers are important legal protection for audit firms and important context for users. But their placement and prominence varies dramatically, and this variation is often strategic.

Honest audit reports put key limitations front and center, right in the executive summary. They clearly state "this audit only covers the specified contracts and does not assess economic viability, does not guarantee the absence of vulnerabilities, and does not cover off-chain components or external dependencies." Users reading even just the summary understand the boundaries of what was actually reviewed.

Manipulated reports bury these disclaimers in dense legal language on page twenty-three of a twenty-five-page document, using the smallest font possible and the most opaque phrasing. The report spends twenty pages creating the impression of comprehensive security coverage, then quietly disclaims all of it in fine print most people will never read.

Pay particular attention to disclaimers about what testing was actually performed. Did the auditors build proof-of-concept exploits to verify vulnerabilities were real, or did they just flag potential issues through static code analysis? Did they test how the protocol behaves when integrated with malicious external contracts, or did they only review isolated contract logic? Did they verify the deployed on-chain code matches the audited code, or just trust the team's claim that it does?

The most dangerous disclaimer to watch for is "this audit assumes the correctness of external dependencies." This means if your protocol interacts with Uniswap, Chainlink, or other external systems, the auditors assumed those systems work perfectly and never checked whether your protocol handles failures or malicious behavior from dependencies correctly. Since external dependency failures are a major source of exploits, this assumption creates a huge blind spot in the security review.

The final manipulation to watch for happens before you even read the specific report—it's about how teams present which firm audited their protocol and what that firm's track record actually shows. A protocol might prominently claim "audited by [Famous Audit Firm]," trading on that firm's reputation to imply thorough security review.

But dig deeper and you might discover that Famous Audit Firm has audited dozens of protocols that subsequently got exploited, sometimes for hundreds of millions in losses. Their brand recognition is high, but their actual security track record is questionable. Meanwhile, lesser-known specialized firms with perfect track records—zero exploits across all audited protocols—don't get the same marketing value despite providing better security assurance.

Some teams take this even further, claiming association with audit firms that barely reviewed their code. "Reviewed by [Top Firm]" might mean the firm conducted a quick preliminary assessment, found multiple critical issues, and declined to complete a full audit because the code was too problematic. The team fixes a few issues, shops the audit to another firm who completes the engagement, but still markets the original famous firm's involvement as if they endorsed the protocol's security.

Before trusting a protocol based on audit pedigree, verify the claim. Go to the audit firm's official website and look at their verified audit reports. Is this protocol listed? Does the report on the firm's site match what the protocol team is sharing? Are there any public statements from the auditors about the engagement? Cross-referencing these details catches misrepresentations where teams overstate the extent or nature of their security review.

Even the best audits can't find everything, which means every protocol needs a crisis response plan for when vulnerabilities get discovered after deployment. How a team handles zero-day discoveries and emergency security incidents tells you more about their actual security posture than the audit reports ever will. Yet most protocols have no formal incident response process, leaving them scrambling when critical threats emerge.

The difference between a protocol that survives a discovered vulnerability and one that gets exploited for tens of millions comes down to response speed and decision-making under pressure. Understanding what good emergency response looks like helps you evaluate which protocols are actually prepared to protect user funds when things go wrong.

The critical window in any security incident is the first six hours after vulnerability discovery. This is when the team must detect the threat, verify it's real and exploitable, assess the potential damage, and make initial containment decisions—all while potentially racing against attackers who may have discovered the same vulnerability independently.

Protocols with mature security operations have monitoring systems that detect unusual activity before exploits succeed. They're watching for abnormal transaction patterns, unexpected contract state changes, rapid fund movements, and other indicators that something is wrong. When these signals trigger, automated alerts notify the security team immediately, not during business hours the next day when damage is already done.
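One of the simplest signals of this kind is a spike in fund outflows relative to a recent baseline. The following is a deliberately basic sketch using a z-score; the hourly window, the threshold of 4.0, and the function name are all assumptions, and production monitoring would combine many more signals.

```python
from statistics import mean, pstdev


def is_outflow_anomalous(history: list[float], latest: float,
                         z_threshold: float = 4.0) -> bool:
    """Flag the latest hourly outflow if it sits far above the recent baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        return latest > baseline
    return (latest - baseline) / spread > z_threshold


# Hypothetical hourly outflows in thousands of dollars.
recent = [100.0, 120.0, 90.0, 110.0, 105.0]
print(is_outflow_anomalous(recent, 115.0))
print(is_outflow_anomalous(recent, 5000.0))
```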

The first decision point is whether to engage circuit breakers or pause functionality. Most modern protocols build emergency pause mechanisms into their contracts, allowing authorized addresses to freeze deposits, withdrawals, or other critical functions when threats are detected. But pause mechanisms create their own risks—users hate having their funds locked, pausing can trigger market panic, and the pause authority itself is a centralization risk that could be abused.

Teams face a brutal trade-off: pause too aggressively and you disrupt legitimate users while potentially crying wolf over false alarms; pause too conservatively and attackers drain the protocol while you're still confirming the vulnerability is real. Protocols with strong incident response have clear playbooks defining exact conditions that trigger pauses, who has authority to make the call, and what communication goes out to users when emergency measures activate.
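A playbook entry of the kind described can be written down as data, so the conditions, authority, and user message are decided before an incident rather than during one. This is a sketch with hypothetical field names and signer names, not a standard format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PauseRule:
    trigger: str              # exact condition that justifies a pause
    freezes: str              # which functions get frozen
    approvals_needed: int     # how many authorized signers must agree
    user_notice: str          # message published when the pause activates


def pause_authorized(rule: PauseRule, approvals: set[str],
                     authorized: set[str]) -> bool:
    """Only approvals from pre-authorized signers count toward the threshold."""
    return len(approvals & authorized) >= rule.approvals_needed


rule = PauseRule(
    trigger="confirmed exploit transaction against the vault",
    freezes="deposits and withdrawals",
    approvals_needed=2,
    user_notice="Unusual activity detected; emergency pause active while we investigate.",
)
authorized = {"alice", "bob", "carol"}
print(pause_authorized(rule, {"alice", "mallory"}, authorized))
print(pause_authorized(rule, {"alice", "carol"}, authorized))
```

Requiring more than one approval limits abuse of the pause authority, at the cost of a slower response.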

Once initial containment measures are in place, teams enter the patch development phase where they must fix the vulnerability quickly without introducing new exploits through hasty code changes. This is extraordinarily difficult under pressure—the team is exhausted, users are panicking, and every hour the protocol remains paused costs money and reputation.

The temptation is to implement the fastest possible fix and rush it to deployment. This is almost always a mistake. Hasty security patches frequently contain their own vulnerabilities because they weren't thoroughly reviewed and tested. The cure becomes worse than the disease when a rushed patch opens new attack vectors that weren't present in the original code.

Mature protocols have incident response procedures requiring that even emergency patches go through abbreviated but still rigorous review. At minimum, this means two senior engineers independently reviewing the patch code, automated test suite verification that the patch fixes the vulnerability without breaking existing functionality, and a quick consultation with the protocol's audit firm or a security advisor to sanity-check the fix before deployment.
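The minimum review bar described above can be expressed as a deployment gate. A minimal sketch, assuming the patch author does not count as one of the two independent reviewers; the class and field names are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class EmergencyPatch:
    author: str
    reviewers: set[str] = field(default_factory=set)  # senior engineers who approved
    tests_passed: bool = False        # automated suite confirms fix, no regressions
    advisor_signed_off: bool = False  # audit firm or security advisor sanity check


def ready_to_deploy(patch: EmergencyPatch) -> bool:
    """All three conditions from the text must hold before deployment."""
    independent = patch.reviewers - {patch.author}
    return (len(independent) >= 2
            and patch.tests_passed
            and patch.advisor_signed_off)


patch = EmergencyPatch(author="dana", reviewers={"dana", "eli"},
                       tests_passed=True, advisor_signed_off=True)
print(ready_to_deploy(patch))  # the author's own approval is not independent
patch.reviewers.add("farah")
print(ready_to_deploy(patch))
```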

Some protocols maintain relationships with audit firms that include emergency response retainers. For an annual fee, the audit firm guarantees they'll drop everything and provide same-day review of emergency patches when incidents occur. This professional verification layer prevents teams from deploying dangerous fixes under pressure while still allowing rapid response.

The final critical phase is how the team communicates about the incident and works to restore user trust. This is where most protocols fail catastrophically, either going completely silent while users panic, or releasing misleading information that creates even bigger problems when the full truth eventually emerges.

Good incident communication follows a specific pattern. First, acknowledge the incident immediately without necessarily revealing full details that could help attackers—something like "we've detected unusual activity and activated emergency pause measures while we investigate." This tells users something is happening and the team is responding, without providing a roadmap for exploitation.

Second, provide regular updates on investigation progress even if the updates are just "we're still working on it." Users want to know the team is engaged and making progress, not disappeared into a black hole. These updates should include realistic timelines—if fixing the issue properly will take three days, say that instead of promising resolution in twelve hours and then missing the deadline.

Third, once the vulnerability is fixed and systems are back online, publish a complete post-mortem explaining what happened, why it happened, what the team did to fix it, and what preventive measures are being implemented to ensure this specific class of vulnerability can't happen again. Transparency about failures builds more trust than trying to hide them.

The post-mortem should also address the uncomfortable question of compensation. If user funds were lost or at risk, what is the team doing about it? Are they making users whole from treasury funds? Are they working with insurance providers? Are they offering victims priority allocation in the next funding round? How the team handles the financial impact of security incidents reveals whether they truly prioritize user protection or just their own interests.

What really happens during a security audit? It's not magic—it's a structured, collaborative effort to make your system stronger and safer. Let's walk through the typical six-stage audit process.

Manual review is where auditors find the sneaky, complex vulnerabilities that automated scanners tend to miss. It takes a deep understanding of the language (like Solidity) and the attack patterns popping up across DeFi. This human element is what separates a basic check from a rigorous, battle-hardening analysis.

This structured process ensures a thorough, collaborative effort to strengthen your project's defenses from every angle.

Getting a security audit report can be intimidating, but understanding it is critical for developers and investors. This guide will walk you through turning a complicated document into an actionable tool.

The massive growth in this sector tells you how important this has become. The global cybersecurity audit market was valued at USD 14.5 billion recently and is on track to hit USD 39.8 billion by 2032. You can discover more about these market trends and what they mean for the future of security.

A good audit report is built for everyone, from project managers to developers. It starts broad and then dives deep. Here are the main sections you’ll always find:

This process of scoping, reviewing, and reporting is the standard flow that produces the final document.

This structured approach ensures nothing gets missed, moving from setting boundaries to a deep-dive manual review, and finally, crystal-clear documentation of what was found.

Not all bugs are created equal. Auditors sort their findings based on potential damage and exploitability. Understanding these levels is key to assessing a project's true risk.

An audit report is a snapshot in time. It reflects the security of the code at the moment of the audit. Any changes made after the report is published can introduce new, unverified risks.

Here’s a simple breakdown of what each level means, with DeFi-specific examples:

| Severity Level | Potential Impact | DeFi Example |
| --- | --- | --- |
| Critical | Direct loss of user funds or protocol insolvency. | A reentrancy bug in the main vault contract that allows an attacker to drain all deposited assets. |
| High | Severe disruption of protocol functionality or indirect fund risk. | A flaw that allows a malicious admin to freeze all user withdrawals indefinitely. |
| Medium | Unexpected or undesirable behavior under specific conditions. | A miscalculation in the reward distribution logic that can be exploited to claim slightly more tokens than intended. |
| Low / Informational | Minor deviations from best practices or code style issues. | Gas optimization suggestions or the use of an outdated but still-secure library version. |

As you read, pay close attention to the status of each finding. Are the critical and high-severity issues marked as "Resolved" or "Mitigated"? If you see "Acknowledged" or "Unresolved," that's a serious warning. It could mean the project team decided not to fix some of the most dangerous threats.
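If you transcribe a report's findings into a list, the status check above becomes a short filter. A sketch, assuming each finding has an ID, a severity, and a status; real reports vary in their labels, so the sets below may need adjusting.

```python
SERIOUS = {"critical", "high"}
CLOSED = {"resolved", "mitigated"}


def open_serious_findings(findings: list[dict[str, str]]) -> list[str]:
    """IDs of critical or high findings not marked resolved or mitigated."""
    return [
        f["id"]
        for f in findings
        if f["severity"].lower() in SERIOUS and f["status"].lower() not in CLOSED
    ]


# Hypothetical findings transcribed from a report.
report = [
    {"id": "C-01", "severity": "Critical", "status": "Resolved"},
    {"id": "H-01", "severity": "High", "status": "Acknowledged"},
    {"id": "M-01", "severity": "Medium", "status": "Unresolved"},
]
print(open_serious_findings(report))
```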

Picking a security audit partner is one of the most important decisions you'll make. It's about finding a team you can trust to find the nastiest flaws in your system. This choice directly impacts user trust, investor confidence, and your project's long-term viability.

A common pitfall is grabbing the cheapest or fastest option. A quick, surface-level audit can easily miss critical vulnerabilities, giving you a false sense of security while leaving user funds exposed.

Use this checklist to evaluate potential partners:

Choosing an audit partner is like selecting a specialized surgeon. You wouldn't ask a heart surgeon to perform brain surgery. Similarly, you need an audit firm with a deep, focused expertise in your specific technology to find the most subtle and dangerous flaws.

The global demand for these thorough services is growing. Asia Pacific is becoming the fastest-growing region for these services, with a projected 11.2% CAGR. You can read more about these global cybersecurity trends to understand the bigger picture.

Making the right choice also means thinking about the long-term health of your code. Our guide on smart contract upgrades and their security risks provides essential context for maintaining security long after an audit is complete.

Two questions always come up first: "How much will it cost?" and "How long will it take?" The answer depends entirely on the unique complexity of your project.

Think of it like inspecting a building. A tiny cabin is a quick job. A sprawling office tower with complex wiring is a different story. The same logic applies to smart contracts.

Several key variables drive the final price tag. Understanding these will help you set a realistic budget.

A security audit isn't a commodity where you just pick the lowest bidder. It's a critical investment in your project's future and your users' safety. The cost reflects the level of expertise required to find flaws that could otherwise lead to catastrophic losses.

This table provides illustrative ranges for security audit costs and durations based on the scope and complexity of the smart contracts being audited.

| Project Type | Lines of Code (Approx.) | Estimated Cost (USD) | Estimated Timeline |
| --- | --- | --- | --- |
| Simple Token (ERC-20/721) | 100 - 500 LOC | $5,000 - $15,000 | 1 - 2 Weeks |
| NFT Marketplace | 500 - 1,500 LOC | $15,000 - $40,000 | 2 - 4 Weeks |
| Yield Farming Protocol | 1,500 - 3,000 LOC | $40,000 - $80,000 | 3 - 6 Weeks |
| Complex Lending or DEX | 3,000+ LOC | $80,000 - $250,000+ | 4 - 8+ Weeks |

These numbers highlight an important point: a serious security budget is the mark of a professional operation. If a complex DeFi protocol claims a full audit for just a few thousand dollars, the review was likely a surface-level scan—not nearly enough to truly protect user funds.
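For a rough first-pass budget, the illustrative ranges in the table can be turned into a small lookup. This is only a sketch: the tier values are copied from the table, while the function name, the returned dictionary, and the choice to put boundary values (for example, exactly 500 LOC) in the lower tier are assumptions made here. Line count alone does not determine complexity, so treat the result as a starting point for a conversation with an auditor, not a quote.

```python
# (upper LOC bound, project type, cost range in USD, timeline), from the table.
# An upper bound of None means "no upper limit"; the top tier's cost and
# timeline are open-ended ("$250,000+", "8+ weeks") in the table.
AUDIT_TIERS = [
    (500, "Simple Token (ERC-20/721)", (5_000, 15_000), "1 - 2 weeks"),
    (1_500, "NFT Marketplace", (15_000, 40_000), "2 - 4 weeks"),
    (3_000, "Yield Farming Protocol", (40_000, 80_000), "3 - 6 weeks"),
    (None, "Complex Lending or DEX", (80_000, 250_000), "4 - 8+ weeks"),
]


def estimate_audit(lines_of_code):
    """Map an approximate line count to the table's illustrative tier."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    for upper, project_type, cost_usd, timeline in AUDIT_TIERS:
        # Assumption: a boundary value falls into the lower tier.
        if upper is None or lines_of_code <= upper:
            return {
                "project_type": project_type,
                "cost_usd": cost_usd,
                "timeline": timeline,
            }


estimate = estimate_audit(1_200)
low, high = estimate["cost_usd"]
print(f"{estimate['project_type']}: ${low:,} - ${high:,}, {estimate['timeline']}")
```

A 1,200-line codebase falls into the NFT Marketplace tier, so the sketch reports the $15,000 - $40,000 range and a 2 - 4 week timeline.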

Even with a clear roadmap, a few key questions always pop up. Let's tackle the most common ones.

This uncomfortable question cuts to the heart of what audits actually provide—they dramatically reduce risk but can never eliminate it completely. Even protocols that undergo multiple rigorous audits from top-tier firms occasionally suffer exploits after launch. When this happens, it's critical to understand why the audit missed the vulnerability and what it means for your assessment of that audit firm's competence.

The most common reason audited protocols get hacked is that the vulnerability existed in code that wasn't included in the audit scope. Many protocols audit their core contracts before launch but then add peripheral contracts or upgrade mechanisms afterward without subjecting them to the same rigorous review. Attackers specifically target these unaudited components, knowing they're likely to contain exploitable flaws. Always verify that the code actually running on-chain matches what was audited, and be extremely cautious about protocols that make significant changes after audit without getting those changes reviewed.
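One way to approach the "does on-chain code match what was audited" check is to compare fingerprints of the two bytecode blobs. The sketch below assumes you have already obtained both hex strings yourself: the deployed runtime bytecode (for example, from a block explorer or a node's `eth_getCode` call) and the bytecode compiled from the audited commit. The function names are hypothetical, and SHA-256 is used here only as a convenient fingerprint.

```python
import hashlib


def normalize(bytecode_hex):
    """Lowercase the hex string and drop an optional 0x prefix."""
    cleaned = bytecode_hex.strip().lower()
    return cleaned[2:] if cleaned.startswith("0x") else cleaned


def fingerprint(bytecode_hex):
    """Return a SHA-256 digest of the raw bytecode bytes."""
    raw = bytes.fromhex(normalize(bytecode_hex))
    return hashlib.sha256(raw).hexdigest()


def matches_audited(deployed_hex, audited_hex):
    """True when the two bytecode blobs are byte-for-byte identical."""
    return fingerprint(deployed_hex) == fingerprint(audited_hex)


# Tiny made-up byte strings standing in for real contract bytecode.
audited = "0x6001600055"
deployed_same = "6001600055"
deployed_changed = "0x6002600055"

print(matches_audited(deployed_same, audited))     # identical bytes
print(matches_audited(deployed_changed, audited))  # one byte differs
```

A byte-for-byte comparison is deliberately strict. Different compiler settings or compiler-embedded metadata can produce benign differences, so a mismatch is a prompt to investigate further rather than proof that unaudited logic was deployed.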

The second common reason is that the vulnerability was genuinely novel and no auditor yet had a mental model for detecting it. As DeFi evolves, attackers discover new exploit patterns that auditors haven't learned to look for yet. When a truly novel attack succeeds against an audited protocol, the entire audit industry learns from it and updates its review methodologies. This is why audit firms with strong track records matter: they've seen more attack patterns and have better-developed heuristics for finding subtle issues. It's also why protocols should budget for re-audits after major hacks hit similar protocols, to verify they don't share the same vulnerability.

This is an extremely important question that reveals a massive red flag many people overlook. If an audit firm's public reports consistently show only low-severity findings or informational notes across dozens of audits, while never discovering critical or high-severity vulnerabilities in complex protocols, that firm is almost certainly not conducting rigorous reviews. The reality of DeFi security is that most complex protocols have at least some serious issues—if an auditor never finds them, they're not looking hard enough.

Reputable audit firms with strong track records have public reports containing plenty of critical and high-severity findings that the development teams then fixed before launch. This demonstrates the auditor's competence at finding serious flaws and the development team's commitment to addressing them. If you're evaluating whether to trust a protocol that was audited by a firm you're unfamiliar with, spend an hour reading through that firm's other public reports. If they consistently find only minor issues even in obviously complex protocols, treat their audit as providing minimal security assurance.

This practice is sometimes called "audit shopping"—teams find a firm that will give them a clean report without too many uncomfortable findings, prioritizing the marketing value of "audited by XYZ firm" over actual security improvements. Sophisticated investors specifically look for audit reports with multiple high-severity findings that were subsequently resolved, because this pattern demonstrates both a thorough audit process and a responsive development team. Counterintuitively, a report showing ten resolved critical issues is often a better signal than one showing zero issues found.

Bug bounty programs and audits serve complementary but distinct roles in a comprehensive security strategy, and understanding how they work together helps you build much more robust defenses than relying on either one alone. An audit is a time-bound engagement where a small team of experts examines your code intensively for a fixed period. A bug bounty is an open-ended crowdsourced security review where potentially hundreds or thousands of security researchers can examine your code indefinitely in exchange for rewards when they find issues.

The key advantage of bug bounties is continuous coverage—while an audit examines your code at a specific moment in time, a bug bounty program provides ongoing security review as your protocol evolves, new attack patterns emerge, and more researchers with diverse perspectives examine your system. Bug bounties also scale in a way audits can't—you're effectively getting security review from the global community of white hat hackers, many of whom specialize in finding specific types of vulnerabilities your audit firm might have missed.

The best practice is to complete at least one comprehensive audit before launching any protocol with significant user funds, then establish a bug bounty program immediately at launch offering substantial rewards for critical findings. The audit provides your baseline security confidence, while the bounty program provides continuous monitoring and improvement. For truly critical protocols, the optimal strategy is multiple initial audits followed by an ongoing bug bounty with rewards scaling to the protocol's TVL—platforms managing billions should offer million-dollar bounties for critical vulnerabilities, making it more profitable to report issues than to exploit them.
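The idea of scaling rewards to TVL can be made concrete with a simple formula: take a fixed share of TVL, then clamp it between a floor and a cap. Every number below (the 0.10% share, the $50,000 floor, the $10,000,000 cap) is a hypothetical placeholder chosen for illustration, not a recommendation from the text or an industry standard.

```python
def critical_bounty(tvl_usd, share_bps=10, floor_usd=50_000, cap_usd=10_000_000):
    """Suggest a critical-severity bounty that scales with TVL.

    share_bps is the share of TVL in basis points (10 bps = 0.10%).
    All default values are illustrative assumptions.
    """
    if tvl_usd < 0:
        raise ValueError("tvl_usd must be non-negative")
    # Integer arithmetic avoids floating-point rounding on large dollar values.
    scaled = tvl_usd * share_bps // 10_000
    # Never go below the floor or above the cap.
    return min(cap_usd, max(floor_usd, scaled))


for tvl in (10_000_000, 2_000_000_000, 50_000_000_000):
    print(f"TVL ${tvl:,} -> critical bounty ${critical_bounty(tvl):,}")
```

Under these assumed parameters, a protocol holding $2 billion would offer $2,000,000 for a critical finding, smaller protocols fall back to the floor, and very large ones hit the cap. A real program would tune all three values to its own risk profile and treasury.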

No, and this is a critical point. An audit is a powerful risk-reduction tool, not a silver bullet for total safety. It dramatically lowers the chance of an exploit by having experts hunt down vulnerabilities at a specific moment in time. A strong security posture pairs a high-quality audit with ongoing defenses like bug bounty programs and active monitoring. Any code you change after the audit introduces fresh, unvetted risk.

Automated tools are fast and can flag common, known vulnerabilities. However, they lack the creative, nuanced thinking of a human expert and almost always miss complex or business-logic flaws. A manual audit is a deep dive by security engineers trained to think like an attacker. A top-tier security audit service will use both: automated scans to clear the simple stuff, followed by an intensive manual review to find what really matters.

An audit isn't a one-and-done event. It's a crucial milestone in a continuous security lifecycle. The best practice is to re-audit any significant code changes to maintain a hardened defense.

Every project needs a full audit before its first mainnet launch. After that, security is an ongoing commitment. Re-audit your project when you make:
