Wallet Finder

February 11, 2026

Think of a website security audit as a deep-dive health check for your site. It's a systematic review of your performance, security posture, and user experience to find vulnerabilities before someone else does. The process combines three things: scoping out your assets, thinking like an attacker, and running a series of automated and manual tests to strengthen your defenses. It's non-negotiable for preventing breaches and keeping your users' trust.

Before you start poking around for vulnerabilities, you need a game plan. Without a clearly defined scope, you'll end up chasing ghosts, wasting time on low-risk areas while potentially huge security holes go unnoticed. This initial planning phase is all about making sure your audit is focused, efficient, and tailored to the actual risks your project faces.

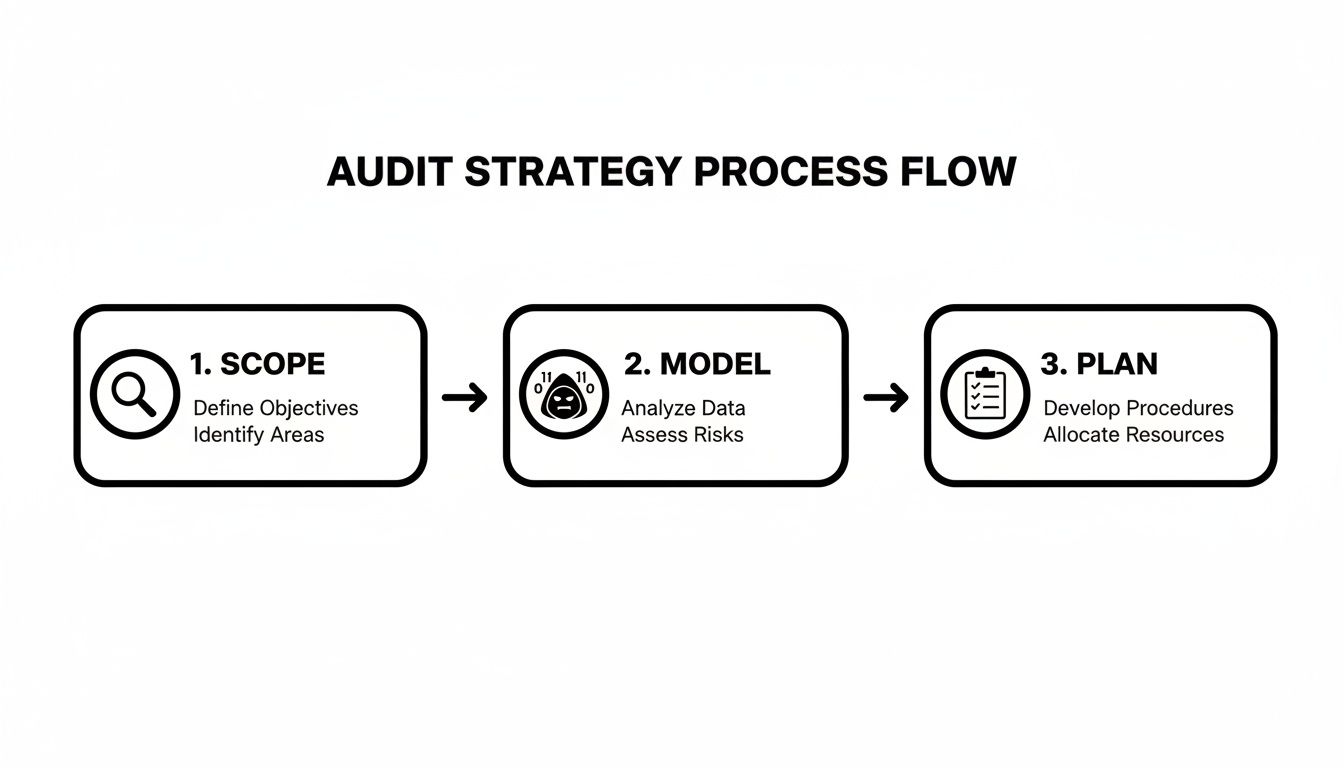

This whole strategic process can be broken down into three core phases.

First, you scope out what you need to protect. Then, you model the threats that could target those assets. Finally, you plan your testing approach based on that intelligence.

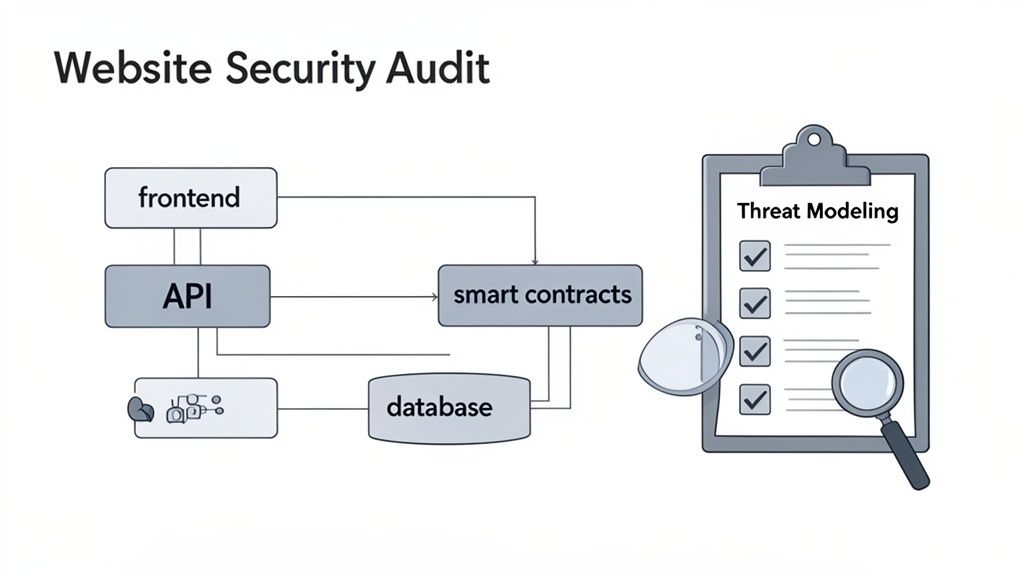

Your first move is to create a complete inventory of every single component that makes up your digital world. It sounds simple, but it rarely is, and you can't protect what you don't know you have. This mapping exercise is especially critical for Web3 and DeFi platforms, where the attack surface is often much wider than a standard website.

Here is an actionable checklist to ensure your asset inventory is exhaustive:

I've seen it happen time and time again: a forgotten subdomain or a legacy API endpoint becomes the entry point for an attack. These neglected assets are goldmines for hackers because they're often unpatched and unmonitored.
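To make that concrete, here is a minimal sketch of an inventory that flags neglected assets. The `Asset` fields, the sample hostnames, and the `neglected` helper are all hypothetical names chosen for illustration; a real inventory would be fed from DNS records, cloud consoles, and deployment configs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Asset:
    name: str              # hostname, API path, or contract address
    kind: str              # e.g. "subdomain", "api", "smart_contract"
    owner: Optional[str]   # responsible team, or None if nobody claims it
    monitored: bool        # covered by logging and alerting?
    patched: bool          # running a currently supported version?


def neglected(assets: list) -> list:
    """Return assets that are unowned, unmonitored, or unpatched."""
    return [a for a in assets if a.owner is None or not a.monitored or not a.patched]


# Sample data, invented for this example.
inventory = [
    Asset("app.example.com", "subdomain", "web-team", True, True),
    Asset("staging-old.example.com", "subdomain", None, False, False),
    Asset("/api/v1/legacy-export", "api", "backend", False, True),
]

for asset in neglected(inventory):
    print(f"review first: {asset.kind} {asset.name}")
```

Anything this filter returns is a candidate for the top of your audit scope, since it matches the "forgotten and unpatched" profile described above.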

Okay, once you have a crystal-clear picture of your assets, it’s time to put on your black hat. Threat modeling is all about thinking like an attacker to figure out how they might try to break your system. You're moving beyond generic vulnerability checklists and identifying specific, realistic attack scenarios that apply directly to your app.

Let's say you're running a DeFi platform that tracks wallet performance. Here are some threat scenarios to model:

Working through these "what-if" scenarios helps you focus your testing on the risks that could actually do some damage. If you want to see how this plays out in the real world, you can check out different security auditing service offerings to see how they approach threat modeling for various platforms.

This strategic foundation—built on a detailed scope and a targeted threat model—is what turns a generic audit into a powerful defense plan built specifically for your project.

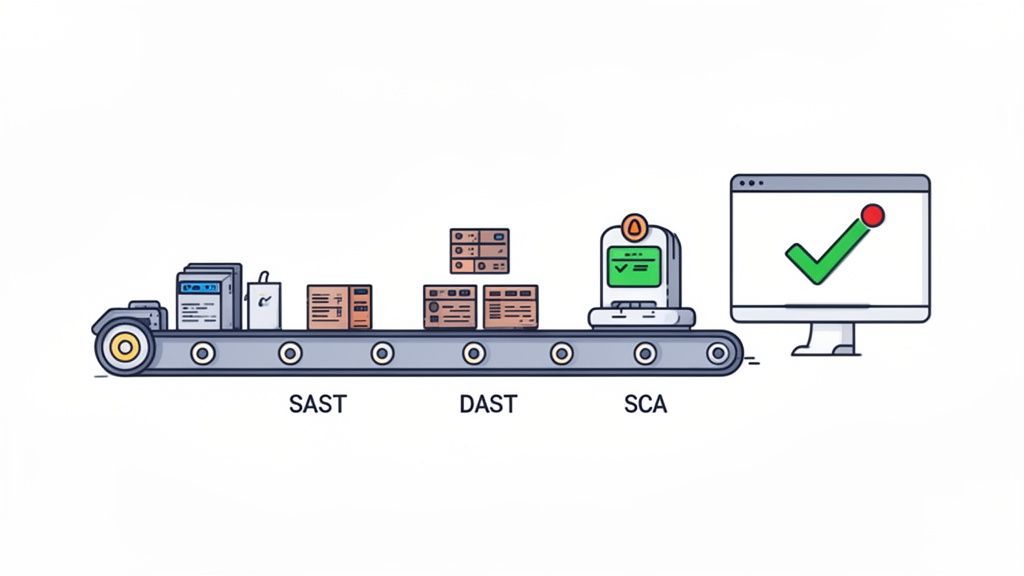

Automated tools are your first line of defense in any serious security audit. Think of them as a force multiplier, giving you the power to rapidly scan your entire tech stack for the common vulnerabilities and misconfigurations that attackers just love to find. They're like tireless sentinels, constantly on the lookout for low-hanging fruit.

These tools are absolutely essential for getting broad coverage fast—something that would take a human tester weeks to replicate. By running them early in your audit, you can knock out a huge chunk of issues before a manual penetration tester even starts. This frees up your human experts to focus their brainpower on complex, business-logic flaws that scanners simply can't find.

Static Application Security Testing (SAST) is like having a security expert proofread your source code line by line. Before your application is even compiled, SAST tools dig into the raw code, searching for insecure patterns. It’s a "white-box" approach that provides an incredible level of detail into your application's guts.

For instance, a SAST tool can spot a potential SQL injection vulnerability by tracing how user input snakes its way through your code until it hits a database query. It flags the problem right in the developer's IDE, long before that code ever sees a live server.
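Here is a small sketch of the pattern a SAST tool would flag, next to the fix it would usually recommend. It uses Python's built-in `sqlite3` module with an in-memory database; the table, the function names, and the payload are assumptions made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "user")],
)


def find_user_unsafe(name: str):
    # User input is concatenated straight into the SQL text.
    # This is the tainted data flow a SAST tool traces and reports.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str):
    # Parameterized query: the input is bound as data, never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "nobody' OR '1'='1"
print(len(find_user_unsafe(payload)))  # the injected OR clause matches every row
print(len(find_user_safe(payload)))    # no user has that literal name
```

The two functions differ by one line, which is exactly why catching this in the IDE is so much cheaper than catching it in production.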

The biggest wins with SAST are:

If SAST is about inspecting the blueprints, Dynamic Application Security Testing (DAST) is about trying to break into the finished building. DAST tools interact with your running web application from the outside, mimicking how a real attacker would approach it. Since it’s a "black-box" technique, it doesn't need any access to your source code.

These scanners actively poke and prod your live site, throwing malicious payloads at it to check for things like Cross-Site Scripting (XSS), command injection, and bad server configurations. A DAST tool might try to inject a script into a contact form, then check to see if that script executes on the confirmation page—a classic sign of an XSS flaw.
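The core of that reflection check can be sketched in a few lines. This is a simplified illustration, not how any particular scanner works: the two `render_confirmation_*` functions stand in for a hypothetical confirmation page, and a real DAST tool would send the probe over HTTP and try many payload variants.

```python
import html

# A harmless marker payload; real scanners use many variants.
PROBE = "<script>alert('xss-probe')</script>"


def render_confirmation_unsafe(message: str) -> str:
    # Echoes user input into the page without encoding it.
    return f"<p>Thanks! You wrote: {message}</p>"


def render_confirmation_safe(message: str) -> str:
    # html.escape encodes <, >, & and quotes, so the browser shows text instead of running it.
    return f"<p>Thanks! You wrote: {html.escape(message)}</p>"


def reflects_unescaped(page: str, probe: str = PROBE) -> bool:
    """True if the probe comes back verbatim, which suggests a reflected XSS flaw."""
    return probe in page


print(reflects_unescaped(render_confirmation_unsafe(PROBE)))
print(reflects_unescaped(render_confirmation_safe(PROBE)))
```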

Because DAST tools test your application in its live environment, they excel at finding configuration slip-ups and runtime issues that SAST scanners are blind to. You really need both to get a complete picture of your security posture.

Let's be honest, modern applications are mostly assembled, not built from scratch. They rely on countless open-source libraries and third-party dependencies. In fact, a huge portion of the code in most new applications comes from these external sources, and every single one is a potential security risk.

Software Composition Analysis (SCA) tools automate the tedious job of identifying every open-source component in your project. They then cross-reference that list against public databases of known vulnerabilities, where issues are typically tracked by CVE identifier. This is critical for any project, but it's non-negotiable for DeFi platforms that might lean on libraries handling interactions with blockchains like Ethereum or Solana. One tiny vulnerability in a third-party wallet library could bring down your entire platform.
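The cross-referencing step looks roughly like the sketch below. Everything named here is hypothetical: the package names, the advisory ID, and the `ADVISORIES` table are invented, and the version parser only handles plain dotted numbers. A real SCA tool resolves transitive dependencies and pulls advisories from live vulnerability feeds.

```python
def parse_version(version: str) -> tuple:
    """Turn '2.3.9' into (2, 3, 9) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


# Hypothetical advisory data, keyed by package name.
ADVISORIES = {
    "example-wallet-lib": [{"id": "EXAMPLE-0001", "fixed_in": "2.4.1"}],
}


def vulnerable_dependencies(pinned: dict) -> list:
    """Return (package, version, advisory id) for every pinned version below its fix."""
    findings = []
    for name, version in pinned.items():
        for advisory in ADVISORIES.get(name, []):
            if parse_version(version) < parse_version(advisory["fixed_in"]):
                findings.append((name, version, advisory["id"]))
    return findings


deps = {"example-wallet-lib": "2.3.9", "example-http-client": "1.0.0"}
for name, version, advisory_id in vulnerable_dependencies(deps):
    print(f"{name} {version} is affected by {advisory_id}")
```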

Picking the right combination of these tools is a crucial first step. If you're looking for some direction, our guide on security auditing software dives deeper into the specific tools available.

Ultimately, the goal is to weave these scanners directly into your CI/CD pipeline. This creates an automated security feedback loop, making sure every single code change gets vetted. Security becomes a natural part of your development flow, not a painful step you tack on at the end.
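One simple way to wire that feedback loop is a gate step that turns scanner output into a pass or fail. The sketch below assumes findings arrive as dictionaries with a `severity` field and that your team blocks merges at "high" and above; both are assumptions for illustration, and real scanners each have their own report format.

```python
SEVERITY_ORDER = ["low", "medium", "high", "critical"]


def should_fail_build(findings: list, threshold: str = "high") -> bool:
    """True if any finding is at or above the blocking severity."""
    limit = SEVERITY_ORDER.index(threshold)
    return any(SEVERITY_ORDER.index(f["severity"]) >= limit for f in findings)


# Hypothetical merged output from SAST, DAST, and SCA steps.
findings = [
    {"tool": "sast", "title": "SQL built by string concatenation", "severity": "high"},
    {"tool": "sca", "title": "Outdated logging library", "severity": "low"},
]

# A CI job would pass this value to sys.exit() so the pipeline stops on failure.
exit_code = 1 if should_fail_build(findings) else 0
print(f"exit code: {exit_code}")
```

Starting with a high threshold and tightening it over time tends to go down better with developers than blocking on every low-severity warning from day one.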

Automated scanners are great for catching the low-hanging fruit—the common, well-known vulnerabilities. But they're just one piece of the puzzle. The real difference-maker in a security audit is the manual penetration test, where a human expert thinks like an attacker to find complex flaws that scanners are completely blind to.

This is where context and creativity come into play. A scanner might flag an outdated library, sure. But a pen tester can figure out if that vulnerability is actually exploitable within your app's unique logic and, more importantly, what the real business impact would be.

Business logic vulnerabilities are some of the trickiest to find. They aren't simple coding mistakes; they're flaws in the very workflow of your application. Automated tools almost always miss them because they can't understand intent. This is where a manual tester’s intuition is invaluable.

Think about a DeFi staking platform. An automated scanner just sees a function to stake tokens. A human tester, on the other hand, starts asking creative, probing questions:

These aren't typical bugs. They're logical loopholes. A tester might probe for a race condition by firing off multiple withdrawal requests at the same moment, hoping several of them pass the balance or limit check before any one of them is recorded. It's a classic attack that generic scanners rarely attempt.
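What the tester is really checking is whether the balance check and the balance update happen as one atomic step. Here is a minimal sketch of the safe pattern using a lock, plus a small harness that fires concurrent withdrawals at it. The `Vault` class and `hammer` helper are hypothetical names; a real platform would enforce this in the database or the contract rather than in application memory.

```python
import threading


class Vault:
    def __init__(self, balance: int):
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw(self, amount: int) -> bool:
        # The check and the update share one lock, so two concurrent
        # requests cannot both pass the balance check.
        with self._lock:
            if amount > self.balance:
                return False
            self.balance -= amount
            return True


def hammer(vault: Vault, amount: int, attempts: int) -> int:
    """Fire concurrent withdrawals and return how many succeeded."""
    results = []

    def worker():
        results.append(vault.withdraw(amount))

    threads = [threading.Thread(target=worker) for _ in range(attempts)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return sum(results)


vault = Vault(balance=100)
successes = hammer(vault, amount=60, attempts=20)
print(successes, vault.balance)
```

If more than one of those 60-unit withdrawals ever succeeds against a 100-unit balance, the check-then-act sequence is not atomic and the limit can be bypassed.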

Another area where manual testing is absolutely critical is in your authentication and session management systems. Scanners can spot basic configuration errors, but they have no idea how well your access controls hold up against a persistent attacker trying to climb the privilege ladder.

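A manual tester will actively try to break these controls. For instance, they might log in as a regular user and then try to directly access an admin-only URL like /admin/dashboard. If the server returns anything other than a firm "Access Denied" error, that is broken access control: the server is missing a function-level authorization check. A closely related flaw, the Insecure Direct Object Reference (IDOR), shows up when changing an object identifier in a request, such as an account ID, exposes another user's data.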
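The server-side check that this kind of probe is looking for can be sketched as a small decorator. This is framework-agnostic and deliberately simplified: the `user` dictionary, the `require_role` decorator, and the `Forbidden` exception are hypothetical stand-ins for whatever your web framework provides.

```python
from functools import wraps


class Forbidden(Exception):
    """Raised when the caller lacks the required role (would map to HTTP 403)."""


def require_role(role: str):
    """Reject the request unless the authenticated user holds the given role."""
    def decorator(handler):
        @wraps(handler)
        def wrapper(user: dict, *args, **kwargs):
            if role not in user.get("roles", ()):
                raise Forbidden(f"{user.get('name', 'anonymous')} lacks role {role!r}")
            return handler(user, *args, **kwargs)
        return wrapper
    return decorator


@require_role("admin")
def admin_dashboard(user: dict) -> str:
    return f"dashboard for {user['name']}"


print(admin_dashboard({"name": "alice", "roles": ["admin"]}))
try:
    admin_dashboard({"name": "bob", "roles": ["user"]})
except Forbidden as exc:
    print(f"denied: {exc}")
```

The point is that the check runs on the server for every request. Hiding the admin link in the UI does nothing if the handler itself never asks who is calling.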

They'll also dig deep into how you manage sessions by:

A successful session hijacking is a total compromise. The attacker effectively becomes that user, gaining full access to their data and permissions. This is why meticulous manual testing of these core functions is absolutely non-negotiable.
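Two properties a tester would typically probe in session handling are whether session identifiers are unpredictable and whether old identifiers stop working after login and logout. The sketch below shows one way to get both, using Python's standard `secrets` module. The `SessionStore` class is a hypothetical in-memory stand-in; production systems usually keep sessions in a shared store with expiry.

```python
import secrets


class SessionStore:
    def __init__(self):
        self._sessions = {}

    def create(self, user: str) -> str:
        # token_urlsafe draws from the OS's cryptographic random source.
        session_id = secrets.token_urlsafe(32)
        self._sessions[session_id] = user
        return session_id

    def rotate(self, old_id: str) -> str:
        """Issue a fresh ID (e.g. right after login) and invalidate the old one."""
        user = self._sessions.pop(old_id)
        return self.create(user)

    def logout(self, session_id: str) -> None:
        self._sessions.pop(session_id, None)

    def lookup(self, session_id: str):
        return self._sessions.get(session_id)


store = SessionStore()
before_login = store.create("alice")
after_login = store.rotate(before_login)
print(store.lookup(before_login))  # the pre-login ID no longer resolves
print(store.lookup(after_login))
```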

For Web3 projects, the stakes are astronomical. A single smart contract vulnerability can lead to immediate and irreversible financial loss. While automated tools can spot some common anti-patterns, manual code review is the only way to find nuanced, protocol-specific flaws.

Testers will focus intensely on attack vectors unique to the blockchain. They'll hunt for things like reentrancy attacks, where a malicious contract can repeatedly call a function to drain funds before the first transaction even finishes. They'll also check for integer overflows and underflows, where a simple math error can lead to disastrous, unexpected outcomes. To get a better handle on this, check out our deep dive into smart contract security.
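Reentrancy is easier to grasp when you can watch it happen. The sketch below is a plain Python simulation, not Solidity and not any real protocol's code: the `on_receive` callback plays the role of the attacker contract's receive hook, and the two vault classes are hypothetical. The only difference between them is the order of the external call and the balance update.

```python
class VulnerableVault:
    def __init__(self):
        self.balances = {}
        self.total = 0

    def deposit(self, who: str, amount: int) -> None:
        self.balances[who] = self.balances.get(who, 0) + amount
        self.total += amount

    def withdraw(self, who: str, on_receive) -> None:
        amount = self.balances.get(who, 0)
        if amount == 0 or self.total < amount:
            return
        self.total -= amount      # funds leave the vault
        on_receive(amount)        # external call hands control to the recipient
        self.balances[who] = 0    # balance is zeroed too late


class SafeVault(VulnerableVault):
    def withdraw(self, who: str, on_receive) -> None:
        amount = self.balances.get(who, 0)
        if amount == 0 or self.total < amount:
            return
        self.balances[who] = 0    # update state first
        self.total -= amount
        on_receive(amount)        # external call comes last


def attack(vault) -> int:
    """Deposit 10 as the attacker, then re-enter withdraw from the callback."""
    vault.deposit("victim", 90)
    vault.deposit("attacker", 10)
    received = []

    def on_receive(amount: int) -> None:
        received.append(amount)
        vault.withdraw("attacker", on_receive)  # re-enter before the first call finishes

    vault.withdraw("attacker", on_receive)
    return sum(received)


print(attack(VulnerableVault()))  # attacker walks away with the whole pool
print(attack(SafeVault()))        # attacker gets only their own deposit
```

Updating state before making the external call is commonly called the checks-effects-interactions pattern, and it's one of the first things a manual reviewer looks for in withdrawal logic.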

The threat landscape is constantly evolving, and audits have to keep up. Recent data shows that a staggering 97% of companies report problems stemming from GenAI, while 78% of attacks now target post-authentication APIs. For financial firms, the average time to just identify a breach is 177 days, with another 56 days needed to contain it. In the crypto world, that kind of delay is unthinkable. You can find more of these cybersecurity compliance statistics over on CyberArrow.io.

Ultimately, manual penetration testing gives you the human intelligence needed to truly validate your security. It’s the only way to confirm that your core functions are safe from clever manipulation, providing a level of assurance that automated tools simply can't match.

So, your scanners have finished, and you have a long list of potential vulnerabilities. Don't pop the champagne just yet. Uncovering this raw data is just the beginning; the real work starts now.

The next step is turning that data into an actionable security roadmap. Because not every finding carries the same weight, treating them all equally is a surefire way to burn out your team and waste resources on ghosts. Your first goal is to validate every single threat to filter out the noise. Automated scanners are notorious for spitting out false positives—warnings that look scary but aren't actually exploitable in your specific setup. Then, you prioritize the real issues, so your team can focus on fixing what truly matters.

Before you jump into fixing things, you have to confirm that a potential vulnerability is a genuine threat. This validation process is where you combine the automated output from your tools with the hands-on, contextual understanding you gained during manual penetration testing.

Imagine a scanner flags a dependency for a critical vulnerability. That’s the starting point. Validation means asking the right follow-up questions:

This manual verification is where expertise shines. You’re separating the signal from the noise and creating a clean, confirmed list of issues that demand attention.

Once you have a list of confirmed vulnerabilities, you need a consistent way to measure how bad they are. This is where a standardized framework like the Common Vulnerability Scoring System (CVSS) becomes essential. CVSS gives you a standardized score from 0 to 10 based on factors like attack complexity, whether user interaction is required, and the impact on confidentiality, integrity, and availability.

But a raw CVSS score is just one piece of the puzzle. The true risk is always relative to your specific business context.

A medium-severity Cross-Site Scripting (XSS) flaw on a static marketing page is an annoyance. But that exact same flaw inside the admin panel of a DeFi platform—where it could steal session cookies and drain wallets—is a five-alarm fire. You have to adapt the score to your environment.

For Web3 and DeFi projects, this is non-negotiable. Any vulnerability that could touch user funds, manipulate on-chain data, or expose private keys should automatically be bumped to a higher severity level, no matter what its base CVSS score says.
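That bump rule is easy to encode. The rating bands below follow the qualitative scale in the CVSS v3.x specification; the one-level escalation and the flag names (`touches_funds`, `exposes_keys`, `alters_onchain_data`) are assumptions invented for this sketch, so treat it as a starting policy rather than a standard.

```python
RATINGS = ["none", "low", "medium", "high", "critical"]


def cvss_rating(score: float) -> str:
    """Map a CVSS base score to its qualitative rating (CVSS v3.x bands)."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "none"
    if score < 4.0:
        return "low"
    if score < 7.0:
        return "medium"
    if score < 9.0:
        return "high"
    return "critical"


def contextual_rating(
    score: float,
    touches_funds: bool = False,
    exposes_keys: bool = False,
    alters_onchain_data: bool = False,
) -> str:
    """Escalate one level when the flaw can reach funds, keys, or on-chain data."""
    base = RATINGS.index(cvss_rating(score))
    if touches_funds or exposes_keys or alters_onchain_data:
        base = min(base + 1, len(RATINGS) - 1)
    return RATINGS[base]


print(cvss_rating(5.4))                            # the score on its own
print(contextual_rating(5.4, touches_funds=True))  # same flaw, fund-handling context
```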

With your context-adjusted scores ready, it's time to build a simple but incredibly powerful tool: a risk prioritization matrix. This grid helps you visually map each vulnerability based on its likelihood of being exploited and its potential business impact. It immediately clarifies where your team should be spending its time and energy.

This matrix helps teams decide what to fix first by mapping the likelihood of an attack against its potential damage.
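A likelihood-by-impact grid like this takes only a few lines to build. The three-level scale, the multiplication, and the action thresholds below are all assumptions chosen for illustration; tune them to your own team's risk appetite.

```python
LEVELS = {"low": 1, "medium": 2, "high": 3}


def priority(likelihood: str, impact: str) -> str:
    """Combine likelihood and impact into a recommended action."""
    score = LEVELS[likelihood] * LEVELS[impact]
    if score >= 6:
        return "fix immediately"
    if score >= 3:
        return "schedule next sprint"
    return "track in backlog"


# Print the full grid: rows are likelihood, columns are impact.
for likelihood in ("high", "medium", "low"):
    row = [priority(likelihood, impact) for impact in ("low", "medium", "high")]
    print(f"{likelihood:>6} | " + " | ".join(f"{cell:<20}" for cell in row))
```

With these thresholds, a flaw that is both likely and damaging lands in "fix immediately", while an unlikely, low-impact one stays in the backlog where it belongs.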

Ultimately, this structured process is the bridge between finding problems and actually fixing them. It transforms a daunting audit report into a clear, prioritized, and actionable roadmap that guides your security resources to protect what matters most.

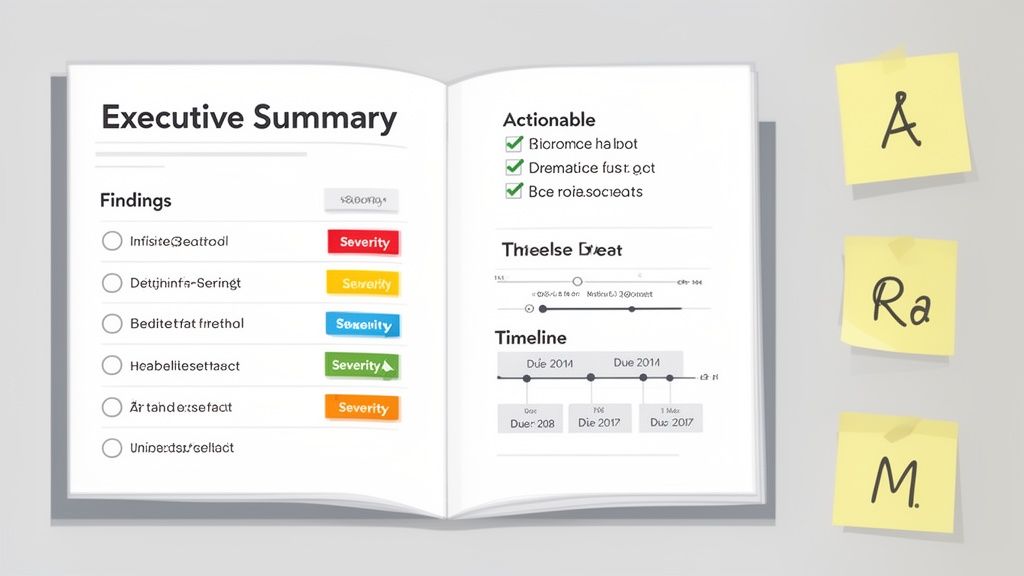

A security audit isn't finished when you find the last bug. Honestly, that's the easy part. The real value is in turning those technical findings into a clear, actionable plan that actually makes the project more secure. A report that just dumps a list of vulnerabilities on your team is just noise; a report that guides them to a solution is priceless.

The trick is to create a single document that speaks to two very different audiences. Your developers need the nitty-gritty details, like proof-of-concept code and specific lines to fix. At the same time, your executive team needs a high-level summary that cuts through the jargon and explains the business risk in plain English.

A great report bridges the gap between finding a problem and fixing it. It needs a logical flow, starting with the big picture and then drilling down into the specifics for the folks who'll be in the trenches doing the work. This way, every stakeholder can get what they need without getting bogged down.

Here’s a structure that works for both audiences:

For every bug you find, your report has to give a developer everything they need to understand, replicate, and fix it. Ambiguity is the enemy of action here. Using a standardized format for each finding keeps things clear and consistent.

The most effective reports tell a complete story for each bug. They don't just say "XSS vulnerability found." They show exactly how it was exploited, what data was exposed, and why it's a real threat to the business or its users.

Use a template like this for documenting each issue:

Once all the findings are documented, the next move is creating a master remediation plan. This is more than just a list of fixes; it's a project management tool. The plan has to assign clear ownership and establish realistic deadlines. Without that accountability, critical risks can slip through the cracks.
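Ownership and deadlines can be generated straight from the validated findings. In the sketch below, the fix windows in `FIX_WITHIN_DAYS`, the finding titles, and the team names are hypothetical; the idea is simply that every item leaves the audit with a name and a date attached.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical fix windows per severity; set these to match your own policy.
FIX_WITHIN_DAYS = {"critical": 2, "high": 7, "medium": 30, "low": 90}


@dataclass
class RemediationItem:
    finding: str
    severity: str
    owner: str
    due: date


def build_plan(findings: list, start: date) -> list:
    """Attach an owner and due date to each finding, most urgent first."""
    items = [
        RemediationItem(
            finding=f["title"],
            severity=f["severity"],
            owner=f["owner"],
            due=start + timedelta(days=FIX_WITHIN_DAYS[f["severity"]]),
        )
        for f in findings
    ]
    return sorted(items, key=lambda item: item.due)


findings = [
    {"title": "Verbose error pages", "severity": "medium", "owner": "web-team"},
    {"title": "Withdrawal race condition", "severity": "critical", "owner": "backend"},
]

for item in build_plan(findings, start=date(2026, 2, 11)):
    print(f"{item.due}  {item.severity:<8}  {item.owner:<9}  {item.finding}")
```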

This is also where you tackle systemic problems. For example, if you see a pattern of issues stemming from insecure third-party scripts, you address it here. It's a bigger deal than you might think. One analysis found a staggering 64% of third-party apps on major sites were accessing sensitive user data they didn't need. These external scripts can quietly expose credentials and financial details, making them a huge priority. You can dig into this web exposure risk more in the research conducted by Reflectiz.

Finally, the audit cycle isn't truly over until you've verified the fixes. After your team has pushed the patches, you absolutely must conduct re-testing. This is a focused effort, looking only at the vulnerabilities that were previously discovered to confirm the fix works and didn't accidentally break something else.

This final step is non-negotiable. It’s the ultimate proof that your website’s security has actually improved, officially closing the loop on the audit and justifying the entire effort.

Even with a detailed plan, jumping into security audits for the first time can feel a little murky. Questions about timing, scope, and cost always come up, and getting these details right is the key to building a security program that actually works for you.

Let’s clear up a few of the most common questions I hear. This should give you the clarity you need to plan and budget effectively, making sure your security efforts are a sustainable habit, not just a one-off panic.

This really boils down to your specific risk profile and how quickly your project evolves. For most traditional web businesses, a comprehensive audit annually is a solid baseline. It’s your yearly check-up to take a deep look at your defenses and catch anything that might have slipped through the cracks.

But the game is entirely different for high-stakes projects.

Here’s how I think about it: your annual or quarterly audit is like seeing a specialist for a deep diagnosis. The continuous, automated scanning you build into your CI/CD pipeline? That's your daily health monitoring. You absolutely need both to get a complete picture of your security posture.

This is a big one. People use these terms interchangeably all the time, but they are worlds apart. Understanding the difference is crucial for spending your security budget wisely.

Think of it like getting a house inspected.

A vulnerability scan is the home inspector running down a checklist. They're looking for known, common problems: "the wiring looks old," "there's a crack in the foundation." It's automated, it's fast, and it gives you a broad overview of potential issues based on a database of known problems. It answers the question, "What might be wrong?"

A penetration test (or pen test) is when you hire someone to actually try and break in. They're not just looking at the locks; they're trying to pick them. They'll jiggle the windows, look for unlocked doors, and see if they can bypass the alarm. It’s a manual, creative, and deep process that confirms which of those potential issues are actually exploitable. A pen test answers the question, "What is definitively broken and how bad is the damage?"

A real website security audit needs both. The scan handles the low-hanging fruit and gives you broad coverage, while the pen test uncovers the complex, logic-based flaws that an automated tool could never understand.

There's no simple answer here—the cost of a security audit can vary wildly depending on the project's scope and complexity. A quick audit for a small, static marketing site might only run you a few thousand dollars.

On the flip side, a comprehensive audit for a complex DeFi platform with multiple smart contracts, intricate APIs, and a massive user base can easily climb into the tens of thousands of dollars or more.

The main things that drive the cost are:

It's crucial to see this as an investment, not an expense. The cost of a thorough audit is a tiny fraction of what a real breach could cost you in lost funds, user trust, and brand reputation.

Ready to turn on-chain data into your next winning trade? Discover profitable wallets and mirror their strategies in real-time with Wallet Finder.ai. Start your 7-day trial and gain the edge you need to act ahead of the market. Find out more at Wallet Finder.ai.